Hypernil Ethics: Moral Dilemmas in New Realities

When Virtual Lives Outweigh Physical World Responsibilities

A player wakes before dawn to answer a virtual call, prioritizing a simulated crisis over breakfast and school runs. The scene reveals how social bonds and duties migrate online, testing kinship and civic norms equally.

Ethicists observe these shifts and ask: when obligations span device boundaries, which promises deserve precedence? Practical guidelines must weigh harm, consent and context, avoid moral absolutism about complex blended lives, and inform urgently needed institutional reform.

Design choices embed values; platforms nudge attention toward deep, sustained commitments.

| Priority | Indicator |
|---|---|
| Family | Immediate |

A practical ethic accepts hybrid duties while setting fallback rules for physical-world care: thresholds of harm, time limits and escalation paths. Communities must debate fair tradeoffs, ensuring compassion, accountability and shared standards before virtual immersion.

Consent and Identity in Mutable Digital Avatars

A player drifts through a bazaar of selves, swapping features like trinkets and learning that each tweak alters how others treat them. The story shows how slippery ownership of likeness becomes when avatars are mutable and social expectations blur, producing tensions that demand clearer agreements.

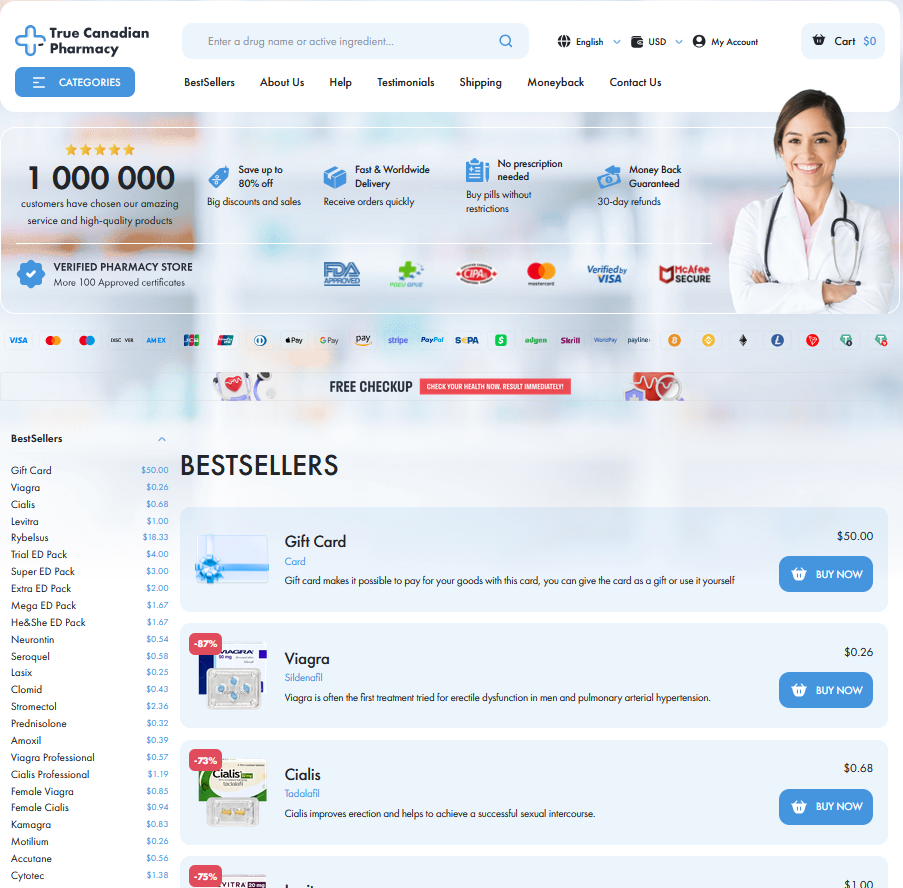

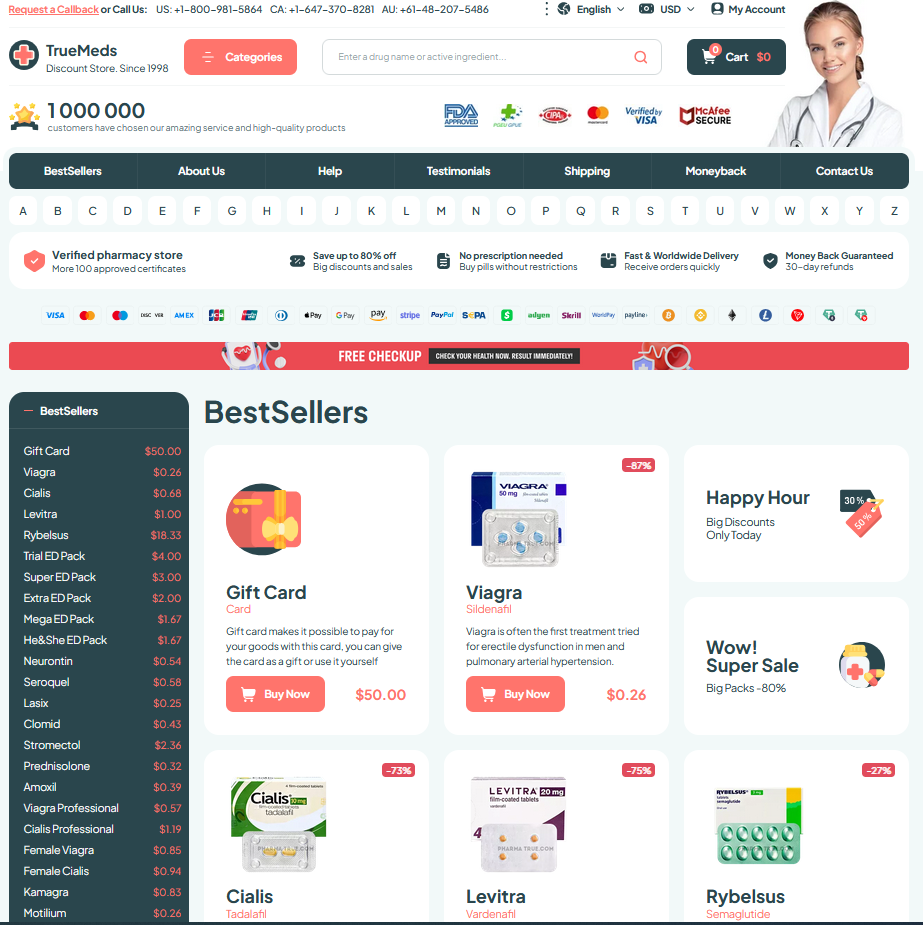

Platforms such as hypernil complicate consent: who authorizes edits, when is identity delegated, and how is informed permission recorded? Designers must build robust, transparent defaults, revocable tokens, auditable logs, and clear remedies so users retain agency across shifting personas, and regulators must craft adaptable rules.
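One way to picture revocable tokens and auditable logs is the minimal sketch below. It is an illustration under stated assumptions, not a description of how any real platform works: the `ConsentLedger` class, its method names, and the hash-chained log layout are all hypothetical, and everything is held in memory for simplicity.

```python
import hashlib
import json
import secrets
from dataclasses import dataclass, field


@dataclass
class ConsentLedger:
    """Hypothetical in-memory ledger of consent grants for avatar edits."""

    grants: dict = field(default_factory=dict)  # token -> grant details
    log: list = field(default_factory=list)     # append-only, hash-chained

    def _append(self, event: dict) -> None:
        # Chain each entry to the previous hash so later tampering is detectable.
        prev = self.log[-1]["hash"] if self.log else "genesis"
        payload = json.dumps(event, sort_keys=True)
        digest = hashlib.sha256((prev + payload).encode()).hexdigest()
        self.log.append({"event": event, "prev": prev, "hash": digest})

    def grant(self, owner: str, editor: str, scope: str) -> str:
        # The owner authorizes one editor to change one aspect (scope) of the avatar.
        token = secrets.token_hex(8)
        self.grants[token] = {
            "owner": owner, "editor": editor, "scope": scope, "active": True,
        }
        self._append({"type": "grant", "token": token, "owner": owner,
                      "editor": editor, "scope": scope})
        return token

    def revoke(self, token: str) -> None:
        # Revocation is recorded too, so the history of permission stays visible.
        self.grants[token]["active"] = False
        self._append({"type": "revoke", "token": token})

    def may_edit(self, token: str, editor: str, scope: str) -> bool:
        g = self.grants.get(token)
        allowed = bool(g and g["active"]
                       and g["editor"] == editor and g["scope"] == scope)
        self._append({"type": "check", "token": token, "editor": editor,
                      "scope": scope, "allowed": allowed})
        return allowed

    def verify_log(self) -> bool:
        # Recompute the hash chain; any edited entry breaks it.
        prev = "genesis"
        for entry in self.log:
            payload = json.dumps(entry["event"], sort_keys=True)
            expected = hashlib.sha256((prev + payload).encode()).hexdigest()
            if entry["prev"] != prev or entry["hash"] != expected:
                return False
            prev = entry["hash"]
        return True
```

In this sketch, a user would call `grant("alice", "bob", "hair")` to let one editor change one feature, `revoke(token)` to withdraw that permission, and an auditor could call `verify_log()` to check that the recorded history has not been altered. A production design would also need persistent storage, authentication, and a remedy process, which are out of scope here.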

Algorithmic Judgment: Who Decides Moral Scores?

In a dimly lit control room, engineers debate whether a silent ledger should rank virtues; hypernil simulations propose empathy as quantifiable data. A familiar human intuition collides with models that flatten context into scores.

Narratives of fairness clash with data-driven metrics, exposing biases hidden in training sets and the values encoded by programmers. Citizens need data literacy and legal recourse to contest scores.

Policymakers must craft transparent audits, public input and appeal pathways so moral scores don't become an unchallengeable decree; otherwise, authority shifts from people to opaque systems.

Resource Scarcity in Simulated Ecosystem Ethics

A user watches a digital wetland collapse as water tokens are hoarded, feeling culpable when a child's avatar goes thirsty in a hypernil realm. The story's tension exposes how scarcity emerges from design choices, economies, and invisible feedback loops that mirror real-world injustice and demand ethical attention.

Designers can embed scarcity-aware protocols: transparent allocation, participatory governance, and simulated commons to reduce harm. Ethical frameworks should guide trade-offs, introduce reparative mechanisms, and monitor distributional outcomes across virtual and physical environment boundaries, with measurable metrics and iterative oversight ensuring fairness, resilience, and accountable stewardship.
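As one example of a measurable metric for distributional outcomes, the sketch below computes the Gini coefficient over per-user holdings of a scarce resource such as water tokens. The choice of the Gini coefficient, the `needs_review` helper, and the 0.5 threshold are assumptions made for illustration; the text above does not prescribe any particular metric or cutoff.

```python
def gini(holdings):
    """Gini coefficient of non-negative holdings.

    0.0 means everyone holds the same amount; values approaching 1.0
    mean a single holder has nearly everything.
    """
    values = sorted(holdings)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    # Standard formula for sorted data:
    # G = 2 * sum(rank_i * x_i) / (n * total) - (n + 1) / n
    weighted = sum(rank * x for rank, x in enumerate(values, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


def needs_review(holdings, threshold=0.5):
    """Flag a distribution for human oversight (threshold is hypothetical)."""
    return gini(holdings) > threshold
```

A community running iterative oversight could recompute this figure after each allocation round and trigger a participatory review whenever hoarding pushes it past the agreed threshold. A single number hides who is affected, so it would complement, not replace, the governance processes described above.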

Cross-reality Obligations: Duty Beyond Physical Borders

A friend once abandoned a garden for an online city, and I watched obligations blur; hypernil realms demanded attention, compassion, and new rules.

We must rethink promises when avatars suffer, because virtual harm can cascade into real lives; communities definitely need legal frameworks and moral clarity.

| Realm | Duty |
|---|---|
| Virtual | Care |

Designers, lawmakers, and citizens must negotiate duties across platforms, balancing enforcement, privacy, and care to accommodate shifting loyalties and shared ecosystems, always with empathy.

Designing Moral AI: Programmers as Modern Legislators

A lone programmer sketches ethical constraints for emergent agents, imagining a legislature coded into silicon. They debate values, balancing human rights, transparency, and cultural variance while sprinting against deployment schedules.

They form cross-disciplinary councils with ethicists, engineers, and government liaisons to codify norms, mandate audits, and assign accountability. Standards must adapt to context rather than harden into rigid code if they are to avert harm.

Education reorients: programmers learn deliberative lawmaking, public engagement, and humility about uncertainty. Iterative simulation tests and transparent rulebooks let citizens contest choices, building legitimacy for machine-made ethics.